AR100

AR100 is an OpenRISC 1000 core implemented in a range of Allwinner SoCs. This page documents the use of the AR100 for real-time applications. Real time in this sense means predictable latency when controlling GPIO. The goal is to run a regular Linux kernel on the standard ARM-based cores (CPUX) and use a shared memory region to transfer data from the CPUX to the AR100 core (CPUS).

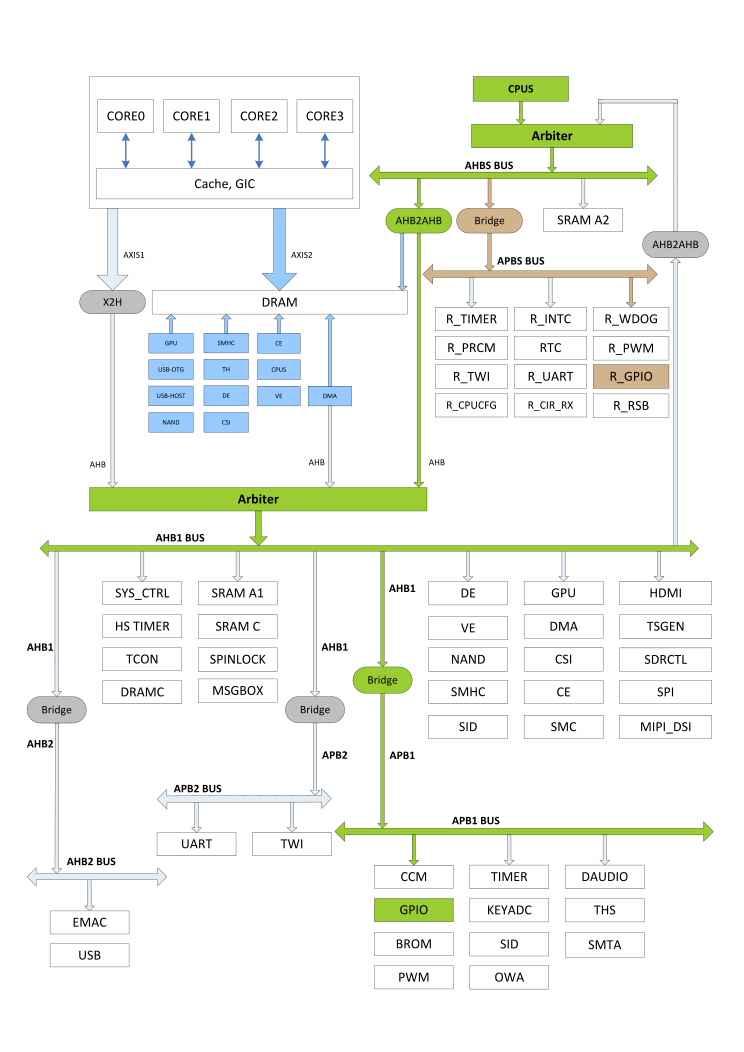

Overview of the A64 system

The overview shows the critical path that the AR100 needs to take in order to set registers in a GPIO bank (marked in green). That path contains two arbiters that can introduce unpredictable delays. It is not known at this point how the arbiters work or what worst-case delay they can cause.

Also seen in the overview is the R_GPIO (marked in brown). This is a GPIO bank that is dedicated to CPUS. Its access latency is lower than that of the regular GPIO bank. However, the TWI (I2C) controller used by CPUX to communicate with the PMIC is located on the same bus, and Linux uses it to poll for changes in the PMIC. If all the bandwidth is used by the CPUS, the CPUX is blocked from accessing the R_TWI, which in turn causes errors in Linux.

One option to remove the need for CPUX to access the R_TWI peripheral is to use a different I2C bus for the CPUX-PMIC communication. Using TWI0 with pins PH0/PH1 on the A64 might remove the need for CPUX to access any of the R_ peripherals.

A second option is to let AR100 and Linux share access to the bus. It should be very seldom that Linux needs to change anything, especially during a print. Once the voltage levels are set, which happens during boot, not much should have to change.

GPIO toggling from CPUS

Speed comparison between PIO and R_PIO

The R_PIO is noticeably faster than the PIO. A comparison shows that R_PIO takes about 13.3 ns to set the GPIO pins, while PIO takes 60 ns. At a CPUS clock of 300 MHz, 13.3 ns corresponds to 4 instruction cycles to set a new value for the PIO bank, and 60 ns corresponds to 18 instruction cycles. The picture below shows the timing on R_PIO while running an infinite loop that sets the pin high and then low.

File:SDS5034X PNG 71.png

The picture below shows the same loop running on PIO.

File:SDS5034X PNG 72.png
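The cycle counts above can be checked with a few lines of arithmetic. This is only a sketch: the 300 MHz CPUS clock is assumed from the PLL6 setting shown in the benchmark output, and `ns_to_cycles` is a hypothetical helper name.

```python
CPUS_CLOCK_MHZ = 300  # assumption: CPUS clocked from PLL6 at 300 MHz


def ns_to_cycles(duration_ns, clock_mhz=CPUS_CLOCK_MHZ):
    """Convert a duration in nanoseconds to the nearest whole clock cycle count."""
    return round(duration_ns * clock_mhz / 1000)


print("R_PIO write:", ns_to_cycles(13.3), "cycles")
print("PIO write:  ", ns_to_cycles(60), "cycles")
```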

Predictability/effect of arbiter

Because CPUX and CPUS (AR100) share the AHB, the two can collide when both try to access resources at the same time. The A64 therefore has an arbiter that manages bus access for both CPUs, which can make latency unpredictable for the CPUS. A test was set up with continuous writes to the PIO while an "apt update" ran on Linux to generate traffic from the main CPU on the AHB. The results can be seen in the picture below. The scope was triggered on the rising edge. The time between the rising and falling edge should be constant, but it is not. The worst case observed was 192 ns from rising to falling edge, compared with a best case of 42 ns. Most of the time the pulse width was 60 ns, but in two instances it was shorter (42 ns and 52 ns). The edges seem to fall into discrete positions 10 ns apart, which corresponds to a bus frequency of 100 MHz.

File:SDS5034X PNG 76.png

By intentionally generating traffic on the AHB/APB set aside for CPUS, it is possible to create collisions there as well. These manifest in a similar way to the latency seen on the PIO. By default, there is only one peripheral on this bus that Linux needs to access, the R_RSB. The result is shown below. A small infinite loop was run on the AR100, and the scope was set up to measure the pulses with infinite persistence.

File:SDS5034X PNG 78.png

The Reduced Serial Bus peripheral has only one instance, so it cannot be moved to different pins. There are some possible workarounds:

- Don't use it. Once the PMIC (the only slave on the RSB) is set up, it does not need further configuration. It seems Linux sets up polling of some registers, but it might not be needed.

- Make a soft/bitbang implementation on different pins. This will require a hardware change from rev A2.

- Live with the possibility of a delayed pulse. The error seems to be in the order of 18 nanoseconds, not important for a 3D-printer.

In comparison, a 16 MHz AVR has an instruction cycle time of 62.5 ns. A step that is one clock cycle off on the AVR is much worse than a bus collision on the AR100.
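To put the two figures side by side, the comparison can be worked out as below. This is a minimal sketch; the 18 ns delay is the value quoted in the list above, and `cycle_time_ns` is a hypothetical helper.

```python
def cycle_time_ns(clock_hz):
    """Duration of one clock cycle in nanoseconds."""
    return 1e9 / clock_hz


avr_cycle_ns = cycle_time_ns(16_000_000)  # 16 MHz AVR
ar100_delay_ns = 18                       # delay observed on the AR100, from the text

print(f"AVR cycle: {avr_cycle_ns} ns")
print(f"One AVR cycle is {avr_cycle_ns / ar100_delay_ns:.1f}x the AR100 delay")
```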

Data transfer from CPUX to CPUS

To make data available for the CPUS to consume, it is useful to reserve a region in memory that the CPUX writes to and the CPUS reads from. This can be either DDR3 memory (plentiful, but slow and unpredictable) or SRAM (much less memory available, but the CPUS needs only 3 cycles per read).

As a first approach, it is suggested to reserve a region in SRAM A2 for the shared data.
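One possible way to organise such a region is a single-producer, single-consumer ring buffer. The Python model below is only a sketch of that idea and is not taken from any existing firmware: the header layout, the `SharedRing` class, the little-endian byte order, and the 32-bit pin state and delay fields are all assumptions, and the byte order in particular would need to be verified on hardware. A `bytearray` stands in for the SRAM region.

```python
import struct

# Assumed layout: two 32-bit indices followed by fixed-size entries.
HEADER = struct.Struct("<II")  # head (written by CPUX), tail (written by CPUS)
ENTRY = struct.Struct("<II")   # pin state, delay (units are an assumption)


class SharedRing:
    """Model of a ring buffer living in a shared memory region."""

    def __init__(self, mem, slots):
        self.mem = mem
        self.slots = slots
        HEADER.pack_into(mem, 0, 0, 0)  # empty: head == tail

    def push(self, pins, delay):
        """Producer side (CPUX). Returns False when the ring is full."""
        head, tail = HEADER.unpack_from(self.mem, 0)
        nxt = (head + 1) % self.slots
        if nxt == tail:
            return False  # one slot is always left empty to tell full from empty
        ENTRY.pack_into(self.mem, HEADER.size + head * ENTRY.size, pins, delay)
        struct.pack_into("<I", self.mem, 0, nxt)  # publish only after the payload
        return True

    def pop(self):
        """Consumer side (CPUS). Returns (pins, delay) or None when empty."""
        head, tail = HEADER.unpack_from(self.mem, 0)
        if head == tail:
            return None
        entry = ENTRY.unpack_from(self.mem, HEADER.size + tail * ENTRY.size)
        struct.pack_into("<I", self.mem, 4, (tail + 1) % self.slots)
        return entry


ring = SharedRing(bytearray(HEADER.size + 4 * ENTRY.size), slots=4)
ring.push(0x01, 300)
ring.push(0x00, 300)
print(ring.pop(), ring.pop(), ring.pop())
```

Because each index has exactly one writer, neither side needs a lock. On real hardware the ordering of the payload write and the index write would also have to be guaranteed.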

SRAM A2

The SRAM A2 has a total size of 0x10000 bytes (64 K). The bottom part of the memory space is reserved for ATF (Arm Trusted Firmware), so in practice only 0x4000 bytes (16 K) are usable for firmware and shared memory. Preliminary tests show that about 0x1800 bytes (6 K) are needed to start a simple program. The standard Crust firmware uses 0x400 bytes for the stack, which might not be needed. That leaves some 0x2500 bytes (just under 10 K) for shared memory. Each (pin state, delay) pair requires 8 bytes without optimization, giving a total of 1184 transitions.
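The transition count follows directly from the region size and the entry size. The sketch below reproduces it; `transition_capacity` is a hypothetical helper, and the 4-byte packed entry is only an illustration of what an optimized encoding might allow, not something the tests above measured.

```python
SHARED_BYTES = 0x2500  # shared-memory budget quoted in the text


def transition_capacity(shared_bytes, entry_bytes=8):
    """Number of whole (pin state, delay) entries that fit in the region."""
    return shared_bytes // entry_bytes


print(SHARED_BYTES, "bytes available")
print(transition_capacity(SHARED_BYTES), "transitions at 8 bytes each")
# Hypothetical packed entry, e.g. 16-bit pin state + 16-bit delay:
print(transition_capacity(SHARED_BYTES, entry_bytes=4), "transitions at 4 bytes each")
```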

SRAM A1

SRAM A1 has a size of 32 K and is not in use during normal operation. See below for benchmarks on data read latency. The base address as seen from the AR100 is 0x40000, which is not well documented.

S_UART to UART bridge

An alternative implementation that is compatible with Klipper out of the box is to send commands over a UART and connect its pins to S_UART, so that the AR100 can catch the traffic. This has the added benefit of not generating any traffic on the CPUS AHB.

Msgbox

There is a message box peripheral included in the SoC. Mainline support for the message box starts with kernel 5.8, but the current stable kernel is 5.4.

Programmable Timer Interrupts

For using the AR100 with Klipper, a good interrupt driven timer is needed.

R_timer

According to the documentation of the A83T, which is very similar to the A64, the R_timer can only be clocked by the 24 MHz crystal and not by the 300 MHz periph clock. This might be problematic because it limits the achievable step rate for the steppers. The A83T user manual mentions a high speed timer that can be synced to the AHBCLK, but it is separated from the CPUS by at least one arbiter and might be used by Linux.

Tick Timer Facility

The OpenRISC 1000 architecture specifies a counter register (TTCR) that is clocked by the CPU clock. According to the OpenRISC 1000 documentation:

TTCR is incremented with each clock cycle and a tick timer interrupt can be asserted whenever the lower 28 bits of TTCR match TTMR[TP] and TTMR[IE] is set.

It is not ideal that the compare field is only 28 bits wide, but the range can either be extended in software or the comparison can be done against a value held in a general purpose register.
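The software extension could work along the following lines. This is a Python model of the idea rather than firmware code: `schedule` and `fire_time` are hypothetical names, the counter is treated as an unbounded integer instead of a wrapping 32-bit register, and it is assumed that a match is only generated when the lower 28 bits become equal to TP after it has been programmed. At 300 MHz the 28-bit field wraps roughly every 0.89 s, so longer delays need the handler to skip a number of matches before acting.

```python
TP_BITS = 28
TP_MASK = (1 << TP_BITS) - 1
TP_PERIOD = 1 << TP_BITS  # cycles between two matches of the same TP value


def schedule(now, delta):
    """Return (tp, skips) for an event `delta` cycles after counter value `now`.

    tp    -- value to program into the 28-bit compare field
    skips -- number of matches the interrupt handler must ignore first
    """
    if delta < 1:
        raise ValueError("delta must be at least one cycle")
    tp = (now + delta) & TP_MASK
    skips = (delta - 1) >> TP_BITS
    return tp, skips


def fire_time(now, tp, skips):
    """Model of the hardware: counter value at which the handler finally acts."""
    first_match = ((tp - now) & TP_MASK) or TP_PERIOD
    return now + first_match + skips * TP_PERIOD


now = 1000
for delta in (5, TP_PERIOD, 3 * TP_PERIOD + 7):
    tp, skips = schedule(now, delta)
    print(delta, hex(tp), skips, fire_time(now, tp, skips) == now + delta)
```

In an interrupt handler, `skips` would be decremented on each tick timer interrupt, and the scheduled action would run only once it has reached zero.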

Benchmarks from ar100-info

The results from running the ar100-info program are included below: [1]

OpenRISC-1200 (rev 1)

D-Cache: no

I-Cache: 4096 bytes, 16 bytes/line, 1 way(s)

DMMU: no

IMMU: no

MAC unit: yes

Debug unit: yes

Performance counters: no

Power management: yes

Interrupt controller: yes

Timer: yes

Custom unit(s): no

ORBIS32: yes

ORBIS64: no

ORFPX32: no

ORFPX64: no

Test support for l.addc...yes

Test support for l.cmov...yes

Test support for l.cust1...no

Test support for l.cust2...no

Test support for l.cust3...no

Test support for l.cust4...no

Test support for l.cust5...no

Test support for l.cust6...no

Test support for l.cust7...no

Test support for l.cust8...no

Test support for l.div...yes

Test support for l.divu...yes

Test support for l.extbs...yes

Test support for l.extbz...yes

Test support for l.exths...yes

Test support for l.exthz...yes

Test support for l.extws...yes

Test support for l.extwz...yes

Test support for l.ff1...yes

Test support for l.fl1...yes

Test support for l.lws...no

Test support for l.mac...yes

Test support for l.maci...yes

Test support for l.macrc...yes

Test support for l.mul...yes

Test support for l.muli...yes

Test support for l.mulu...yes

Test support for l.ror...yes

Test support for l.rori...yes

Test timer functionality...OK

Set clock source to LOSC...done

Test CLK freq...27 KHz

Set clock source to HOSC (POSTDIV=0, DIV=0)...done

Test CLK freq...24 MHz

Set clock source to HOSC (POSTDIV=0, DIV=1)...done

Test CLK freq...12 MHz

Set clock source to HOSC (POSTDIV=1, DIV=1)...done

Test CLK freq...12 MHz

Setup PLL6 (M=1, K=1, N=24)...done

Set clock source to PLL6 (POSTDIV=1, DIV=0)...done

Test CLK freq...300 MHz

== Benchmark ==

== Code in SRAM A2 (I-cache ON), data in SRAM A2 ==

Instructions fetch : 1.0 cycles per instruction

Back-to-back L.LWZ : 3.0 cycles per 32-bit read

L.LWZ + L.NOP : 4.0 cycles per 32-bit read

== Code in SRAM A2 (I-cache ON), data in SRAM A1 ==

Instructions fetch : 1.0 cycles per instruction

Back-to-back L.LWZ : 3.0 cycles per 32-bit read

L.LWZ + L.NOP : 15.9 cycles per 32-bit read

== Code in SRAM A2 (I-cache OFF), data in SRAM A2 ==

Instructions fetch : 3.0 cycles per instruction

Back-to-back L.LWZ : 4.5 cycles per 32-bit read

L.LWZ + L.NOP : 8.0 cycles per 32-bit read

== Code in SRAM A2 (I-cache OFF), data in SRAM A1 ==

Instructions fetch : 3.0 cycles per instruction

Back-to-back L.LWZ : 16.0 cycles per 32-bit read

L.LWZ + L.NOP : 19.3 cycles per 32-bit read

Set clock source to PLL6 (POSTDIV=2, DIV=0)...done

Test CLK freq...200 MHz

Set clock source to PLL6 (POSTDIV=2, DIV=1)...done

Test CLK freq...100 MHz

Setup PLL6 (M=2, K=1, N=24)...done

Test CLK freq...100 MHz

Setup PLL6 (M=1, K=2, N=24)...done

Test CLK freq...75 MHz

Setup PLL6 (M=1, K=1, N=12)...done

Test CLK freq...52 MHz

Restore clock config to original state...done

All tests completed!